Bias and b/s would be baked into the proposed scheme

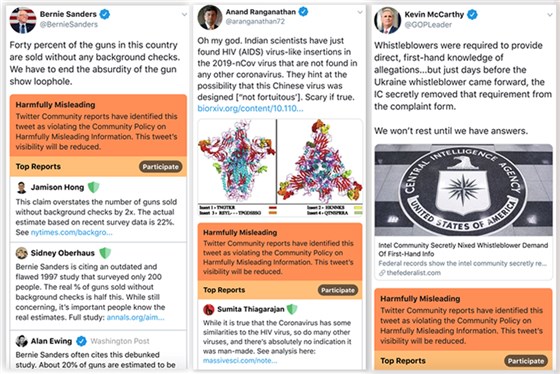

Recently NBC News obtained a leaked demo, since confirmed by Twitter, of a system that would allow verified users to flag and correct misinformation directly below a tweet.

Any tweets flagged as misinformation will have their visibility reduced across the system.

“Verified users” means those hallowed “blue checks”, who, as per an open question I posed recently, will be tasked with arbitrating truth on the microblogging platform.

This is problematic for a number of reasons. For starters, there is no formal, defined policy for who actually becomes anointed with a “blue checkmark”.

In 2019, The Intercept reported on how Twitter was withholding verification from primary challengers, subtly tipping the scales in favour of the incumbents. As more visibility (and worse, the ability to affect the visibility of others) accrues to verified users, the asymmetry becomes more pronounced. It begins to look, dare I say it, rigged.

Ostensibly, accounts are verified once they are proven to be who they say they are. easyDNS once reported a fake easyDNS support account to Twitter, and after a while they took the impostor account down. We then asked: as a 20-year-old internet infrastructure supplier with a large body of downstream users, should we get a verified account?

The answer at the time (over two years ago) was that verified accounts were no longer being handed out while Twitter devised a new policy.

We noted at the time that Thoughts of a Dog is Twitter-verified, so I guess Thoughts of a Dog will soon have the ability to mark tweets as disinformation.

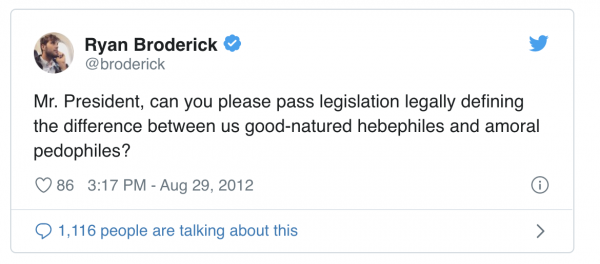

Ryan Broderick, a self-professed hebephile and doxer, who authored a factually incorrect story (a.k.a. misinformation) that led to ZeroHedge’s suspension from Twitter, has a blue check. He’s a verified journalist (he works for BuzzFeed) and exactly the kind of user Twitter says would be able to attach “misinformation” flags to other people’s tweets.

Inevitable questions arise…

Who will check the checkers?

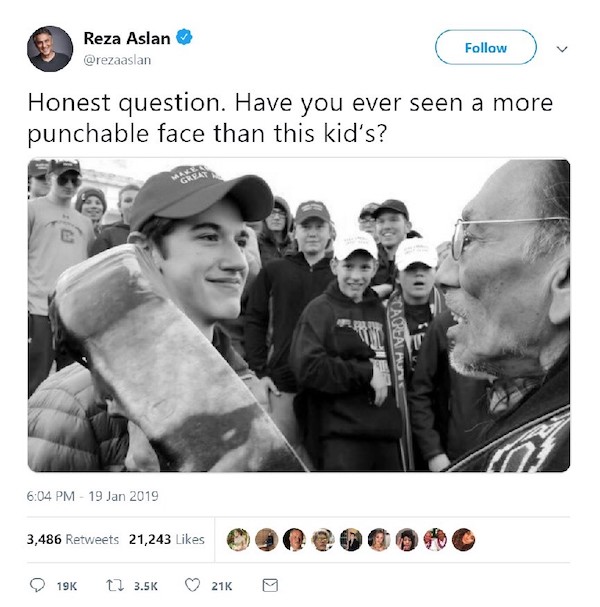

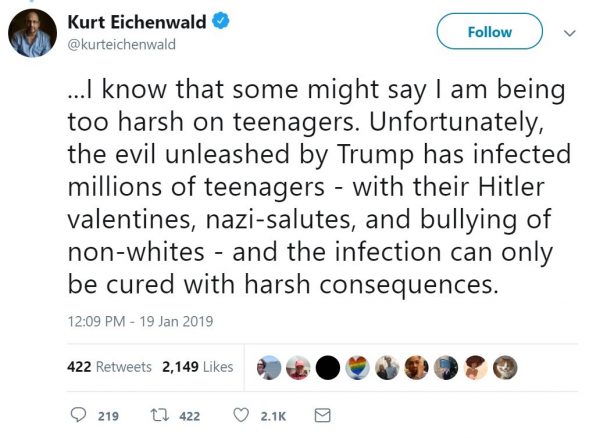

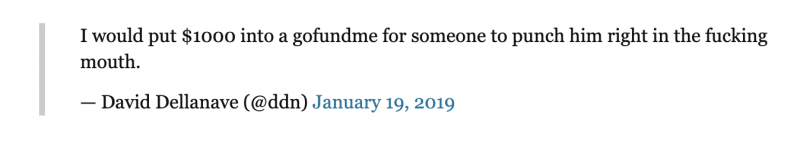

Blue checks routinely ignite and fuel swarms, pile-ons and witch-hunts. They use pretzel logic to demonize something they personally dislike while excusing identical behaviour by their own ilk. Take the case of the Covington kids, who were utterly demonized by a legion of blue checks whose reactions ranged from the ole “literally Hitler” trope to inciting physical violence against them…

What is the appeals process?

I mean, other than all the blue checks going back and deleting their tweets and scrubbing their timelines after they are caught off-guard overreacting and being dead wrong about something. (Reminder: the Covington kids were completely and totally exonerated of what they were accused of, which was, I think, smirking while wearing a MAGA hat.)

And we’re supposed to start taking the word of this crew to objectively sort out what is true from what is false or misleading and do it in a way that doesn’t inject their own biases and personal / corporate agendas into the mix?

It’s baked in that every single Donald Trump tweet will be marked as misinformation. Trump at least has the social capital to push back, but what about everybody else who isn’t a duly elected head of state: the non-billionaires, the politically unconnected plebeians whose tweets are marked as “misinformation” by pearl-clutching, thin-skinned blue checks? Will there be an “appeal” button? A way to plead your case to the Twitter in-group that has pronounced judgement on truth?

It all seems very imperious and grandiose to me, a level of arbitrary power imbalance that typically enrages progressives.

It is not my intent to demonize all blue checks. There are plenty of them who do good journalism and thoughtful work (this is the obligatory “I have close friends who are blue checks!”). My point here is that as a class of users across the platform, they are just as susceptible to hysteria and groupthink as the rest of us. Conferring additional moderation capabilities on them on the basis of class membership is a mistake.

Here’s an idea…

As I’ve said before and in my book, as a libertarian-minded person I don’t believe government regulation would do anything to improve the situation. However, so far the big tech platforms’ attempts at self-regulation have been rather ham-fisted.

The other day I had coffee with a colleague, and among the things we discussed (maybe “ruminated” is a better word) was that in the future, reputations will become paramount as “the centre will not hold” (centralized systems lose cohesion). However, nobody has yet come up with a reputation system that isn’t biased and flawed.

I don’t have an answer to this, but in the case of Twitter, how about this:

- If I choose to follow somebody, just show me their fscking tweets already.

- If somebody else has an issue with somebody’s tweets, I don’t know, maybe there could be some sort of “reply” function or something where somebody could rebut the contents of a tweet.

In other words, and this applies to all tech platforms, don’t worry about me. Don’t worry about anybody. Us rubes can figure out how to sift through b/s and make our own determinations on what passes the smell test.

Instead, worry about your systems. Worry about your security. Worry about protecting our personal data from being breached and leaked. Don’t worry about our minds being contaminated with non-conforming ideas.

My first thought when I saw news of this proposal from Twitter was of Netflix nuking its rating system after Amy Schumer pissed off a sizable portion of the Netflix customer base and her Netflix Original special was subsequently down-voted into oblivion.

“Anything flagged as misinformation will have THEIR visibility reduced across the system.”

*its

You wrote a book?

I wrote two. It’s called “editors”.

Why is it surprising what Twitter is planning to do?

YouTube did a similar thing with its automated takedown notices a long time ago and is still getting away with it, despite the outcry from the community and from legitimate authors who are getting copyright-flagged for their own content.

Corporations will continue to do what’s good for their business, and only that, because that’s their only obligation (towards shareholders). Why people think some corporations are benevolent is beyond me…

You are correct that corporations are out for their shareholders, however, I have remarked many times previously:

When they act like this they incentivize their own disruption, which will be a negative for shareholders.

In more general terms, this is a dichotomy between serving shareholder interests in the near-term vs the long term. I’ve written at length about this over on Guerrilla Capitalism, starting here.